On the issue of calibration accuracy and orthogonality: I was working with a student today on the probe and after we loaded his samples into the instrument and looked at them a bit I realized we should have scanned them so we could use PictureSnapApp to navigate them. But the student said, no worries, he has images he took with his cell phone, so OK, we'll use those.

But right away we noticed that although he had marked the "up" direction on his samples, which we had used to load them in the sample holder, his photos were rotated by around 20 degrees with respect to the samples in the instrument.

But we went ahead and did the two-point calibration, and the calibration accuracy error was large, as expected (around 30-40%), so we then added a third calibration point to deal with that. Now we can navigate the sample just fine, but the calibration accuracy really didn't change that much: it went from around 40% to 30%. Even more telling, the current FOV rectangle didn't have the expected aspect ratio for this instrument.

But again, the PictureSnapApp sample navigation is working fine. Then it occurred to me, DOH!, that on a sample which is rotated with respect to the image, the FOV should not be drawn orthogonally, but instead, should be drawn rotated! That is, because the image is rotated, the FOV (and sub images for that matter) should be rotated too!

Then I realized (DOH!, DOH!) that this is why the calibration accuracy was so bad. We're simply calculating ABS((xdistance - ydistance) / xdistance). That is why the FOV has the wrong aspect ratio on an image that is rotated with respect to the sample! What we really need to do is to calculate the stage positions for the rotated FOV!
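To make that concrete, here is a minimal Python sketch (not PictureSnapApp's actual code). It assumes that `xdistance` and `ydistance` are the per-axis stage-units-per-pixel scales one gets from two calibration points when the image is treated as unrotated. The post does not define them, so that interpretation, the function names, and the example coordinates are all hypothetical.

```python
import math

def image_to_stage(p, scale, theta_deg):
    """Hypothetical 'true' mapping: rotate a pixel coordinate by theta, then scale."""
    t = math.radians(theta_deg)
    x, y = p
    return (scale * (x * math.cos(t) - y * math.sin(t)),
            scale * (x * math.sin(t) + y * math.cos(t)))

def orthogonal_scales(p1, p2, s1, s2):
    """Per-axis scales from two calibration points, ignoring any rotation."""
    sx = (s2[0] - s1[0]) / (p2[0] - p1[0])
    sy = (s2[1] - s1[1]) / (p2[1] - p1[1])
    return sx, sy

def orthogonality_error(sx, sy):
    """Same form as ABS((xdistance - ydistance) / xdistance)."""
    return abs((sx - sy) / sx)

# Two pixel positions picked on the image (made-up values).
p1, p2 = (100.0, 100.0), (900.0, 700.0)

for theta in (0.0, 20.0):
    s1 = image_to_stage(p1, 0.01, theta)
    s2 = image_to_stage(p2, 0.01, theta)
    sx, sy = orthogonal_scales(p1, p2, s1, s2)
    print(f"rotation {theta:4.1f} deg: error = {orthogonality_error(sx, sy):.1%}")
```

With no rotation the two scales agree and the error is essentially zero. With rotation the x and y scales diverge even though the underlying calibration is perfectly good, and the size of the error depends on which two points were picked.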

Or one can simply rotate the image until it matches the sample in the instrument (of course it probably would be easier to load the darn sample in the same orientation as the image to begin with!). Here is an example with the sample loaded in the instrument and rotated about 20 or 30 degrees relative to the image:

As one can see, the aspect ratio, which should be 1.333 (4:3), is not at all right. Also, the calculated orthogonal accuracy is 46%, which is way high but completely to be expected in a rotated image. Then we loaded a copy of the image which the student had rotated on his phone, and re-calibrated:

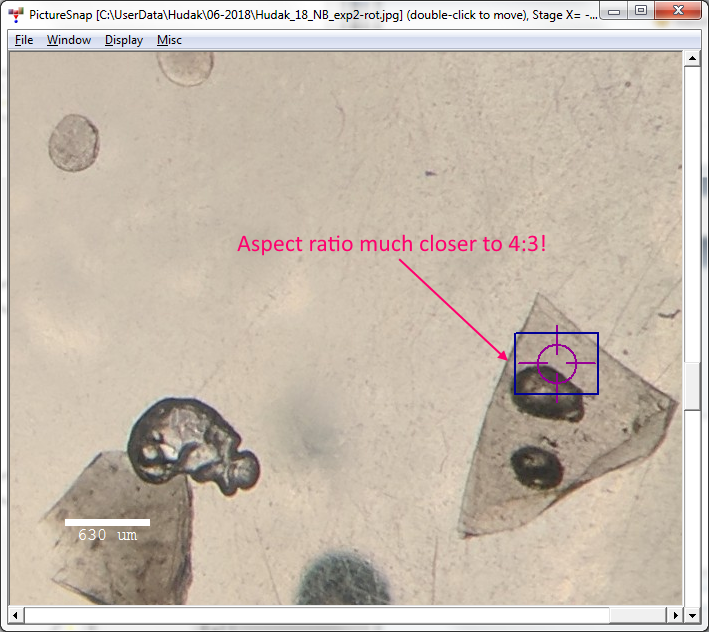

Now one can see that the FOV aspect ratio is much closer to 4:3. And the calibration accuracy is 1.47%. Much better!

Bottom line:

1. None of this applies to samples which cannot be rotated, for example petrographic slides.

2. None of this matters if one only needs to navigate the sample. The stage location of points is always correct. But the aspect ratio of the displayed FOV (blue rectangle) may appear distorted, depending on the degree of rotation between the sample and the image.

3. If one is using FOV annotations or sub images from the Image Locator feature, then yes, one should rotate the image, or better still, just be sure to load the sample in the same orientation as the image (or close to it).

4. Donovan is going to have to take a closer look at the issue of rotated images (and the displayed FOV graphic and FOV annotations). Calculating and drawing the rotated FOVs is easy once one knows the degree of rotation of the sample. In fact, with three calibration points this rotation falls nicely out of the calculated rotation matrix, but with two points... he will have to think about that a bit. Another difficulty will be with rendering the sub images in the image locator feature properly rotated.
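As a rough illustration of the three-point case in item 4, here is a sketch in Python (again, not the PictureSnapApp implementation). It assumes a plain affine model, stage = A * pixel + t, fitted exactly through three calibration points, reads the rotation off the fitted matrix, and maps an orthogonal stage FOV back into image pixels so it can be drawn rotated. All function names are hypothetical, and a flipped axis between image and stage (a reflection) would need extra handling that is not shown.

```python
import math

def fit_affine(img_pts, stage_pts):
    """Fit stage = A @ pixel + t exactly through three (pixel, stage) pairs."""
    (p1, p2, p3), (s1, s2, s3) = img_pts, stage_pts
    # Difference vectors relative to the first calibration point.
    pa = (p2[0] - p1[0], p2[1] - p1[1])
    pb = (p3[0] - p1[0], p3[1] - p1[1])
    sa = (s2[0] - s1[0], s2[1] - s1[1])
    sb = (s3[0] - s1[0], s3[1] - s1[1])
    det = pa[0] * pb[1] - pb[0] * pa[1]
    if abs(det) < 1e-12:
        raise ValueError("calibration points are collinear")
    # A = S * inverse(P), where the columns of P and S are the difference vectors.
    a00 = (sa[0] * pb[1] - sb[0] * pa[1]) / det
    a01 = (sb[0] * pa[0] - sa[0] * pb[0]) / det
    a10 = (sa[1] * pb[1] - sb[1] * pa[1]) / det
    a11 = (sb[1] * pa[0] - sa[1] * pb[0]) / det
    t = (s1[0] - (a00 * p1[0] + a01 * p1[1]),
         s1[1] - (a10 * p1[0] + a11 * p1[1]))
    return ((a00, a01), (a10, a11)), t

def rotation_degrees(A):
    """Rotation angle implied by A, assuming no reflection or shear."""
    return math.degrees(math.atan2(A[1][0], A[0][0]))

def stage_to_pixel(s, A, t):
    """Invert the affine map: pixel = inverse(A) @ (stage - t)."""
    (a00, a01), (a10, a11) = A
    det = a00 * a11 - a01 * a10
    dx, dy = s[0] - t[0], s[1] - t[1]
    return ((a11 * dx - a01 * dy) / det, (a00 * dy - a10 * dx) / det)

def fov_corners_in_pixels(center, width, height, A, t):
    """Corners of an orthogonal stage FOV, expressed in image pixels."""
    cx, cy = center
    hw, hh = width / 2.0, height / 2.0
    stage_corners = [(cx - hw, cy - hh), (cx + hw, cy - hh),
                     (cx + hw, cy + hh), (cx - hw, cy + hh)]
    return [stage_to_pixel(c, A, t) for c in stage_corners]
```

Drawing the polygon through the four returned pixel corners gives a FOV that is tilted on the image but keeps its true 4:3 proportions. Two calibration points alone do not pin down a general affine map, which is presumably why that case needs more thought.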