We modified the TestEDS app slightly so that it displays results only for the traditional (single term), Willis (two term) and new six term dead time expressions. We also increased the observed count rates to 300K cps and added output of the predicted (dead time corrected) count rates, as seen here:

Note that this version of TestEDS.exe is available in the latest release of Probe for EPMA (using the Help menu).

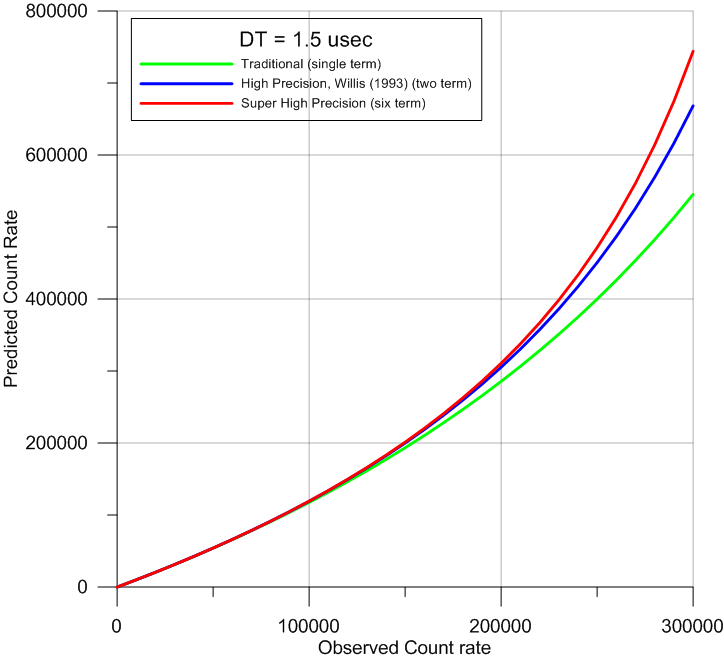

Now if, instead of plotting the ratio of the observed to predicted count rates on the Y axis, we plot the predicted count rates themselves, we get this plot:

Note that unlike the ratio plot, all three of the dead time correction expressions show curved lines. This is what I meant when I stated earlier that it depends on how the data is plotted.

Note also that at true (corrected) count rates around 400 to 500K cps we are seeing differences in the predicted intensities between the traditional expression and the new six term expression of around 10 to 20%!
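For anyone who wants to play with these numbers outside of TestEDS, here is a minimal Python sketch. It assumes the three expressions are the 1, 2 and 6 term truncations of the same series, N = N' / (1 - Σ (N'τ)^n / n), which is my reading and is not spelled out in this post. The function name and the 1.5 µs dead time are hypothetical, chosen only for illustration, so the printed percentages will not necessarily match the plots above.

```python
def correct_dead_time(observed_cps, dead_time_s, n_terms=1):
    """Predicted (dead time corrected) count rate from an observed rate.

    ASSUMED form: N = N' / (1 - sum_{n=1..n_terms} (N' * tau)**n / n)
    n_terms=1 -> traditional, n_terms=2 -> Willis, n_terms=6 -> six term.
    """
    x = observed_cps * dead_time_s
    series = sum(x**n / n for n in range(1, n_terms + 1))
    denominator = 1.0 - series
    if denominator <= 0.0:
        raise ValueError("observed rate too high for this dead time")
    return observed_cps / denominator


TAU = 1.5e-6  # hypothetical dead time in seconds, not a measured value

for observed in (10e3, 50e3, 100e3, 200e3, 300e3):
    traditional = correct_dead_time(observed, TAU, n_terms=1)
    willis = correct_dead_time(observed, TAU, n_terms=2)
    six_term = correct_dead_time(observed, TAU, n_terms=6)
    # Relative difference between six term and traditional predictions
    diff_pct = 100.0 * (six_term - traditional) / traditional
    print(f"{observed:9.0f} cps observed: "
          f"trad {traditional:9.0f}  Willis {willis:9.0f}  "
          f"six term {six_term:9.0f}  ({diff_pct:5.2f}% vs trad)")
```

Under these assumptions the three predictions are nearly identical at low observed rates and diverge as the observed rate climbs, which is the same qualitative behavior as the curved lines in the plot.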

To test the six term expression we might, for example, measure our primary Ti metal standard at, say, 30 nA, then measure a number of secondary standards (TiO2 in this case) at different beam currents, and plot the k-ratios for a number of spectrometers, first using the traditional dead time correction expression:

Note spectrometer 3 (green symbols), which uses a PETL crystal. Next we plot the same data, but this time using the new six term dead time correction expression:

The low count rate spectrometers are unaffected, but the high intensities from the large area Bragg crystal benefit significantly in accuracy.
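The arithmetic behind a constant k-ratio test can be sketched roughly as follows. This is only an illustration: the function names are hypothetical, the series form of the dead time expression is my assumption (it is not given in this post), and background subtraction is left out for brevity. Each observed rate is dead time corrected, normalized to its beam current, and the ratio is taken.

```python
def correct_dead_time(observed_cps, dead_time_s, n_terms):
    # ASSUMED series form; n_terms=1 is traditional, n_terms=6 is six term
    x = observed_cps * dead_time_s
    series = sum(x**n / n for n in range(1, n_terms + 1))
    return observed_cps / (1.0 - series)


def k_ratio(unk_cps, unk_na, std_cps, std_na, dead_time_s, n_terms):
    """k-ratio from observed count rates (cps) and beam currents (nA).

    Background subtraction is omitted here to keep the sketch short.
    """
    unk = correct_dead_time(unk_cps, dead_time_s, n_terms) / unk_na
    std = correct_dead_time(std_cps, dead_time_s, n_terms) / std_na
    return unk / std


# Placeholder inputs for illustration only, NOT measured data
k_trad = k_ratio(150e3, 120.0, 80e3, 30.0, 1.5e-6, n_terms=1)
k_six = k_ratio(150e3, 120.0, 80e3, 30.0, 1.5e-6, n_terms=6)
print(f"traditional: {k_trad:.4f}   six term: {k_six:.4f}")
```

If the dead time correction is doing its job, repeating this calculation for the same secondary standard at each beam current should give the same k-ratio every time. A k-ratio that drifts with beam current points to the dead time constant or the expression.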

I can imagine a scenario where one is measuring one or two major elements and 3 or 4 minor or trace elements, using 5 spectrometers. The analyst measures all the primary standards at moderate beam currents, but in order to get decent sensitivity on the minor/trace elements the analyst selects a higher beam current for the unknowns.

Of course one can use a different beam condition for each set of elements, but that would take considerably longer. Now we can do our major and minor elements together using higher beam currents and not lose accuracy.

I've always said that for trace elements background accuracy is more important than matrix corrections, but that's only because our matrix corrections are usually accurate to 2% relative or so. Now that we know we can see 10 or 20% relative errors in our dead time corrections at high beam currents, I'm going to amend that: the dead time correction can be another important source of error for trace and minor elements if one is using the traditional (single term) dead time expression at high beam currents.

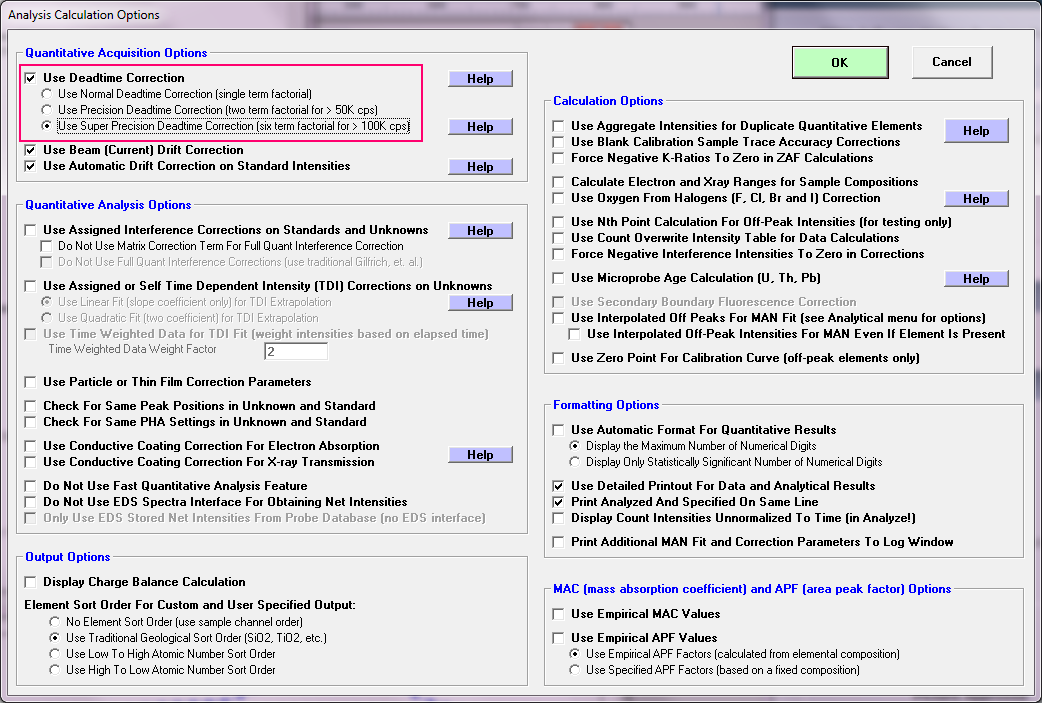

The good news is that the six term expression has essentially no effect at low beam currents, so simply select the "super high" precision expression as your default and you're good to go!

Test it for yourself using the constant k-ratio method and feel free to share your results here.