I am not asking you to restate your argument.

I am asking you to answer the question: do all four dead time correction equations produce the same results at low count rates, as demonstrated in this graph?

In fact, they do produce similar corrections at low count rates, though they only give exactly the same result when the count rate is zero.

Thank you!

In fact at 1.5 usec, they (the traditional vs. logarithmic expressions) produce results that are the same within 1 part in 10,000,000 at 1000 cps, 1 part in 100,000 at 10K cps and 1 part in 10,000 at 20K cps. So much for your claims that the traditional expression "substantially outperforms the logarithmic expression at low count rates"!

And do you know why they start diverging at a few tens of thousands of cps? Because the traditional expression does not handle multiple photon coincidence, and Monte Carlo modeling confirms that the divergence is due to these relatively infrequent multiple photon events at these relatively low count rates. And as you have already admitted, at higher count rates the traditional expression fails even worse.

But this is not necessarily the source of the problems with your modeling. Let me respond with another plot with more appropriate scaling; it pertains to my correction constant determined by linear regression for channel 4/TAPJ/Si for the region in which the linear model corrects the data well. If my point is not clear, then please reread my lengthy post above, especially the long paragraph:

Since you don't show us the actual data (why is that?), I can't tell if you are being disingenuous or just honestly not understanding what you are doing. You apparently want us to accept your claim that the data are "corrected well". So let's just accept that for now because I'm going to assume you are arguing in good faith.

The real problem is that you show us that both expressions at 1.07 usec yield very similar slopes. Of course they would, wouldn't they, as you finally admitted above. But then you show us another slope (blue line) using the logarithmic expression at 1.19 usec (though strangely enough you don't also show us the traditional expression at 1.19 usec, why is that?).

In fact it's even stranger that you decided to show us the logarithmic expression using a *higher* dead time constant, because if you thought about this for even a minute you would realize that

when correcting for both single and multiple photon coincidence (using the logarithmic expression), the dead time constant must be (very) slightly decreased, not increased (compared to the traditional expression)!

This is because the traditional expression does not account for multiple photon coincidence and therefore when regressing intensity data to a straight line, it starts to be biased towards higher dead time values than it should be, when including count rates above 20 to 30K cps or so. This small fact is what you have been overlooking this whole time.
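A short series expansion makes the direction of that shift explicit. This is only a sketch, and it assumes the commonly quoted forms of the two expressions: traditional $N = N'/(1 - N'\tau)$ and logarithmic $N = N'/(1 + \ln(1 - N'\tau))$, where $N'$ is the observed count rate, $N$ the corrected count rate, and $\tau$ the dead time constant. The symbols are notation chosen for this illustration.

```latex
\ln(1 - N'\tau) = -N'\tau - \frac{(N'\tau)^2}{2} - \frac{(N'\tau)^3}{3} - \cdots
\quad\Longrightarrow\quad
1 + \ln(1 - N'\tau) \;<\; 1 - N'\tau
\qquad \left(0 < N'\tau < 1 - e^{-1}\right)

\frac{N'}{1 + \ln(1 - N'\tau)} \;>\; \frac{N'}{1 - N'\tau}
\qquad \text{(same } \tau \text{ in both expressions)}
```

Under those assumed forms, at the same $\tau$ the logarithmic expression always applies the larger correction, so matching the traditional result over a given range of count rates requires a slightly smaller $\tau$ in the logarithmic expression, not a larger one.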

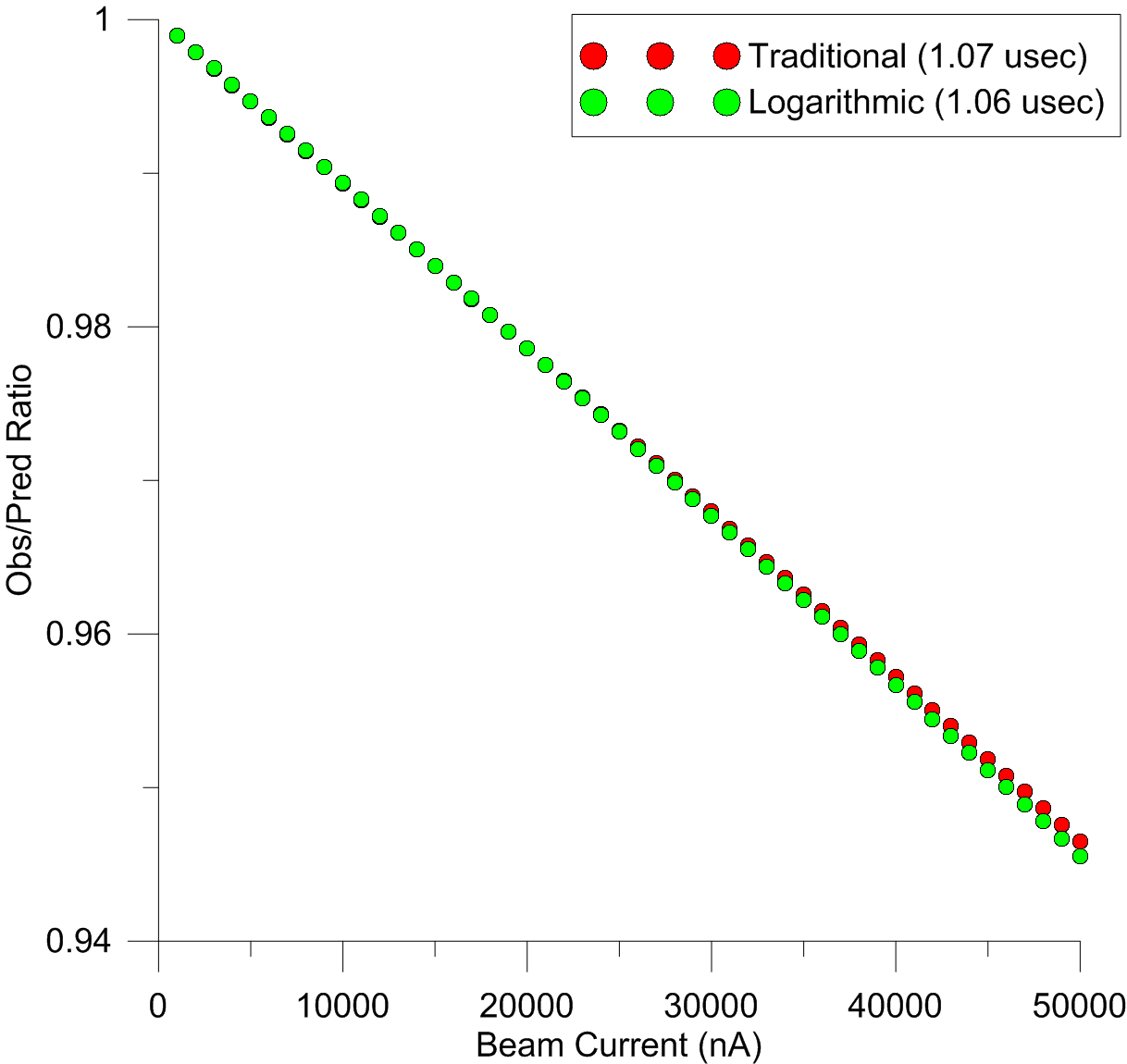

Please plot the traditional expression at 1.07 usec, and the logarithmic expression at 1.06 usec, you know, 0.01 usec different, and let us know what you see! Awww, never mind, here it is for you:

Note the nearly identical response until we get to 30K cps or so. So it's strange that you chose not only to change the dead time constant for the logarithmic expression by a huge amount, but also in exactly the *wrong direction*... so is this an honest mistake or what? Sorry, but I really have to ask.
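For anyone who wants to check this comparison numerically, here is a minimal Python sketch. It assumes the commonly quoted forms of the two expressions (traditional `N'/(1 - N'*tau)` and logarithmic `N'/(1 + ln(1 - N'*tau))`); the function names `traditional`, `logarithmic` and `compare` are hypothetical, chosen for this illustration, and the count rates in the loop are arbitrary sample values rather than measured data.

```python
import math


def traditional(observed_cps, tau_s):
    """Assumed traditional form: N = N' / (1 - N' * tau)."""
    return observed_cps / (1.0 - observed_cps * tau_s)


def logarithmic(observed_cps, tau_s):
    """Assumed logarithmic form: N = N' / (1 + ln(1 - N' * tau))."""
    # log1p(-x) evaluates ln(1 - x) accurately when x is small.
    return observed_cps / (1.0 + math.log1p(-observed_cps * tau_s))


def compare(rates_cps, tau_trad_s=1.07e-6, tau_log_s=1.06e-6):
    """Return (rate, traditional, logarithmic, relative difference) rows.

    The default dead times are the 1.07 and 1.06 usec values from the post.
    """
    rows = []
    for rate in rates_cps:
        trad = traditional(rate, tau_trad_s)
        log_corr = logarithmic(rate, tau_log_s)
        rows.append((rate, trad, log_corr, log_corr / trad - 1.0))
    return rows


if __name__ == "__main__":
    # Arbitrary sample observed count rates in cps (not measured data).
    for rate, trad, log_corr, rel in compare([1e3, 1e4, 3e4, 1e5, 2e5]):
        print(f"{rate:>9.0f} cps  trad={trad:12.2f}  log={log_corr:12.2f}  rel diff={rel:+.2e}")
```

The last column is the fractional difference between the two corrected count rates, which is the quantity to watch as the observed count rate increases.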

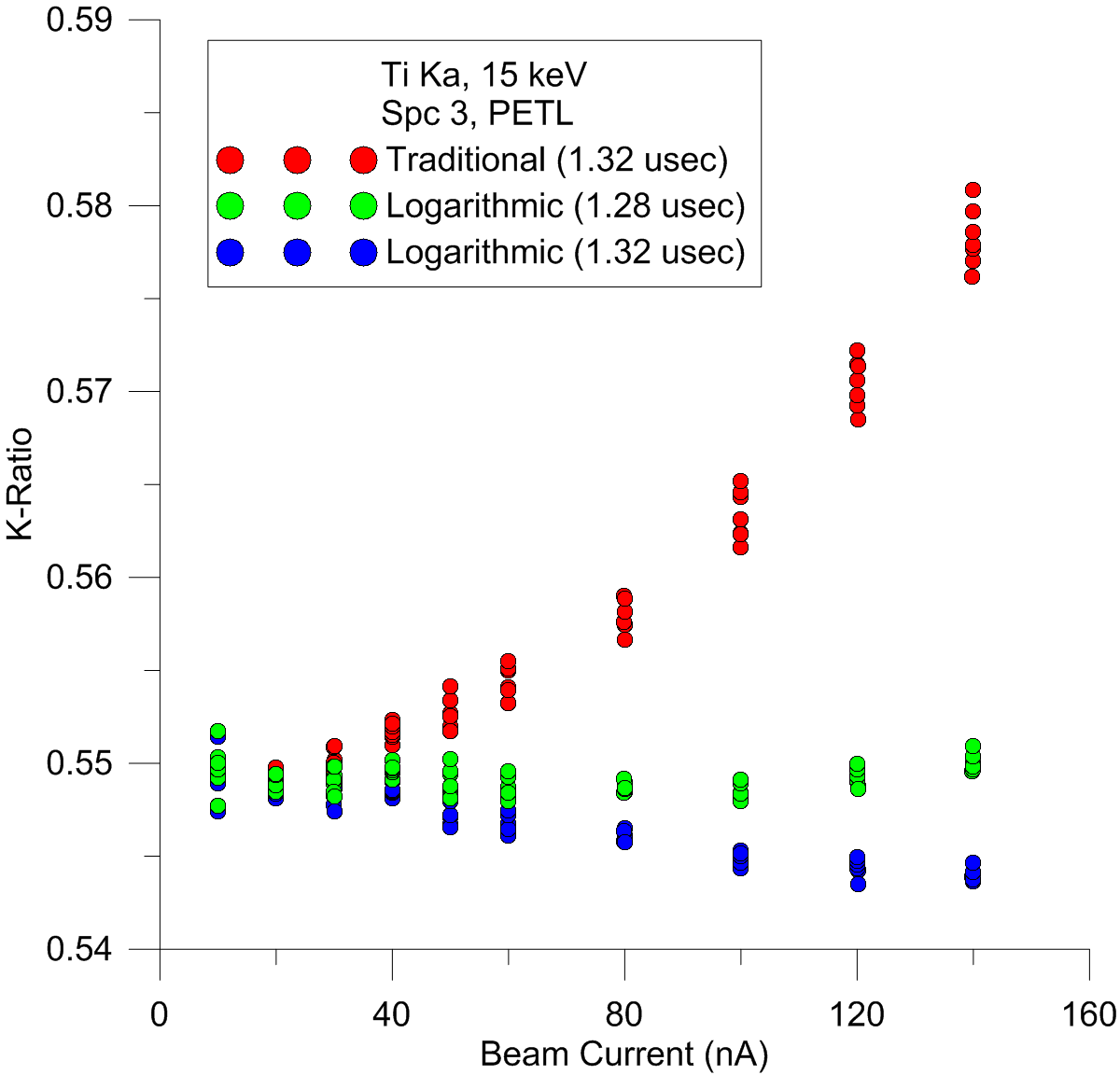

I still think actual EPMA data shows these differences quite well (and especially well using the constant k-ratio method, as I will be writing about next in the constant k-ratio topic). So I will leave you with this plot, which clearly shows that both expressions yield statistically identical results at 10 nA (15K cps on TiO2), but the traditional method visibly starts to lose accuracy at around 30 nA (45K cps), and the wheels are already coming off at around 40 nA (60K cps):

Again, please note that the dead time constant must be *reduced*, not increased, when correcting for multiple photon coincidence events, exactly as one would expect. Maybe you need to answer this question next:

Do you agree that one (ideally) should obtain the same k-ratio over a range of beam currents, if the dead time correction is being properly applied?